Ollama Chatbot brings ChatGPT-style AI conversations to your Linux desktop through a web interface backed by open-source large language models (LLMs) that run locally. Setup starts with installing Ollama, the core tool that downloads and runs the models on your machine. This guide walks through the Ollama setup on Linux, from the hardware you need to the commands to run. Once Ollama is installed, downloading models for the chatbot takes only a few commands.

Chatbot Ollama is a web UI for Ollama that works much like the ChatGPT interface. The sections below cover each step required to get started, from installing the essential software to downloading language models and launching the UI. Every step is a short command you can paste into a terminal, so the guide is approachable whether you are a seasoned programmer or new to AI.

How to Install Ollama on Linux

The first step in setting up Ollama on your Linux system is to install the Ollama tool itself. Ollama is an open-source tool for downloading, managing, and running large language models (LLMs) locally, a bit like a package manager for models. It supports a wide variety of Linux distributions, so installation is straightforward. Open a terminal (on many desktops the Ctrl + Alt + T shortcut does this), then enter the command below to run the installation script and set up Ollama:

```bash
curl https://ollama.ai/install.sh | sh
```

Running the installation command automates the setup of Ollama on your machine. Because the command pipes a remote script straight into your shell, it is advisable to review the script before running it so you understand what it does. Follow any prompts that appear during the process to make sure Ollama is installed correctly. Once the script finishes, run the `ollama` command in the terminal to check that the installation was successful. If nothing is printed or the command is not found, run the installation script again.
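
If you want a more explicit check than simply typing `ollama`, the sketch below tests whether a command is on your `PATH`. The `check_install` helper is a hypothetical name introduced here for illustration and is not part of Ollama.

```bash
# check_install is a hypothetical helper (not part of Ollama).
# It reports whether a command is available on PATH; defaults to "ollama".
check_install() {
  cmd=${1:-ollama}
  if command -v "$cmd" >/dev/null 2>&1; then
    echo "$cmd found at $(command -v "$cmd")"
  else
    echo "$cmd not found on PATH"
    return 1
  fi
}
```

Calling `check_install` with no arguments checks for `ollama`. If it is found, `ollama --version` should print the installed version.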

Running Ollama in the Background

To use the Ollama Chatbot, the Ollama server has to be running in the background. Starting it directly in a terminal is cumbersome, because it ties up that terminal window for as long as the server runs. To get around this, I have developed a Python-based background tool that runs the Ollama server for you, which is particularly handy if you want to manage the server without keeping a terminal open.

Before using this Python tool, you must have Git installed on your system. You can install it with your distribution’s package manager; on Ubuntu, `sudo apt install git` will suffice. Once Git is installed, clone the repository containing the daemon tool with:

```bash
git clone https://github.com/soltros/ollama-mini-daemon.git
cd ollama-mini-daemon/
```

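Following that, grant execution permissions to the scripts with `chmod +x *.py` and start the background server with `./daemon.py`. The server then keeps running independently of your terminal, so you can interact with the Ollama Chatbot without keeping a window open. To stop it later, run `./shutdown_daemon.py` from the same directory.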
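
To confirm that the background server actually came up, you can probe it over HTTP. This is a minimal sketch that assumes the Ollama server listens on its default address, `http://localhost:11434`. The `server_is_up` helper is a hypothetical name, and the daemon tool may behave differently on your setup.

```bash
# server_is_up is a hypothetical helper: it succeeds if an HTTP server
# answers at the given URL within two seconds.
# Assumption: Ollama listens on http://localhost:11434 by default.
server_is_up() {
  url=${1:-http://localhost:11434}
  curl --silent --fail --max-time 2 --output /dev/null "$url"
}
```

For example, `server_is_up && echo "Ollama server is running"` prints the message only when the server responds.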

How to Download Ollama Models

Ollama needs at least one model before the Chatbot can respond to your inputs. Ollama provides a straightforward way to pull various LLMs, including popular options like ‘llama2’ and ‘orca2’. Navigate to the ‘library’ section on the Ollama website to see the models available for download. To download a specific model, use the `ollama pull` command followed by the model name. For example, to get the Llama2 model, execute:

```bash
ollama pull llama2
```

After you execute the command to download a model, it is typically saved under the `~/.ollama/` directory on your Linux system (the exact location can differ if the server runs as a system service). You can pull additional models at any time in the same way. Each model has different strengths, so select your models based on what you plan to use the Ollama Chatbot for. Additionally, check for updates periodically to ensure you’re using the latest models.
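
If you want several models, a small loop saves retyping the command. The sketch below is one way to do it; `pull_models` and the `OLLAMA_BIN` override are hypothetical names added for illustration, so that the loop can be tried without downloading anything (for example with `OLLAMA_BIN=echo`).

```bash
# pull_models is a hypothetical helper: it runs "ollama pull" once per
# model name given as an argument.
# Assumption: OLLAMA_BIN is an override invented for this sketch; it is not
# an Ollama setting. It defaults to the real "ollama" binary.
pull_models() {
  bin=${OLLAMA_BIN:-ollama}
  for model in "$@"; do
    # Keep going if one download fails, but report it on stderr.
    "$bin" pull "$model" || echo "failed to pull $model" >&2
  done
}
```

For example, `pull_models llama2 orca2` downloads both models mentioned above, and `ollama list` then shows what is installed locally.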

How to Install Chatbot Ollama on Linux

Installing Chatbot Ollama on your Linux system starts with one prerequisite: Node.js. The Chatbot UI depends on Node.js to run. The installation commands vary by Linux distribution. For instance, Ubuntu and Debian users can run:

```bash
curl -sL https://deb.nodesource.com/setup | sudo bash -
sudo apt-get install -y nodejs
```

After you have NodeJS installed, the next step is to clone the Ollama Chatbot repository from GitHub. You can do this with:

```bash
git clone https://github.com/ivanfioravanti/chatbot-ollama.git
cd chatbot-ollama/
```

Inside the cloned directory, run `npm ci` to install all necessary dependencies. Finally, start the Chatbot UI with:

```bash
npm run dev
```

Upon successfully executing this command, open a web browser and go to `http://localhost:3000` to access the Chatbot.

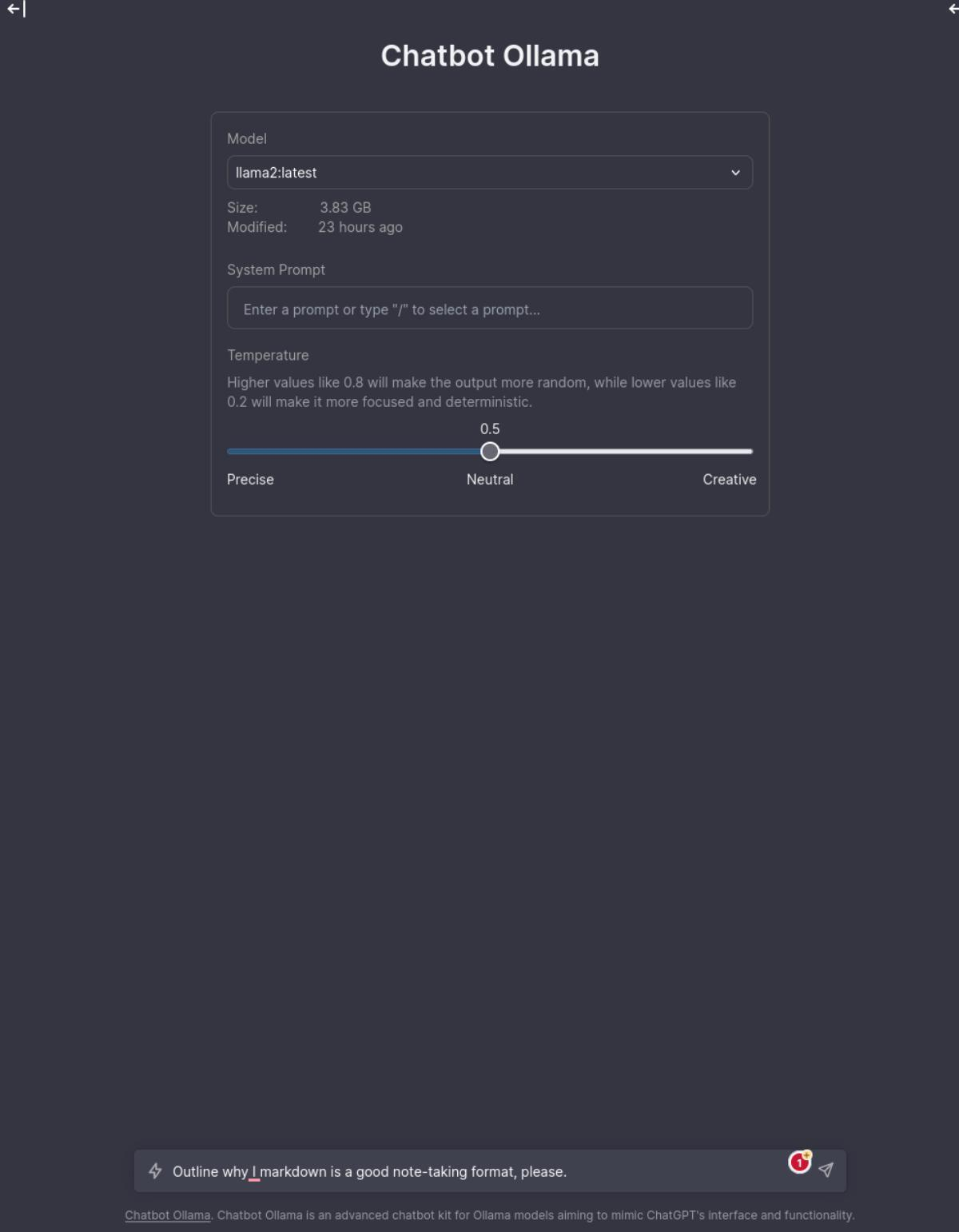
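
The UI steps above can be collected into one small script. This is a sketch under stated assumptions rather than an official installer: `run`, `setup_chatbot_ui`, and the `DRY_RUN` switch are hypothetical names added here, and the script assumes Git and Node.js are already installed.

```bash
# run executes a command, or only prints it when DRY_RUN=1.
# DRY_RUN is a hypothetical switch invented for this sketch.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# setup_chatbot_ui clones the UI if the target directory does not exist yet,
# installs its dependencies, and starts the development server.
setup_chatbot_ui() {
  dir=${1:-chatbot-ollama}
  if [ ! -d "$dir" ]; then
    run git clone https://github.com/ivanfioravanti/chatbot-ollama.git "$dir" || return 1
  fi
  run cd "$dir" || return 1
  run npm ci || return 1
  run npm run dev
}
```

Setting `DRY_RUN=1` before calling `setup_chatbot_ui` prints the commands without running them, which is a cheap way to preview what the script would do. Since the function changes directory, you may prefer to call it in a subshell: `(setup_chatbot_ui)`.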

How to Use Ollama Chatbot on Linux

Using the Ollama Chatbot on Linux is quite intuitive once the setup is complete. Start by visiting `http://localhost:3000` in your preferred web browser. When you load the page, you will see the Chatbot interface where you can choose from a list of available models, such as Llama2 or Orca2. Selecting the right model is crucial as it affects the Chatbot’s behavior and responses to your queries.

In addition to selecting a model, you can adjust the ‘temperature’ setting, which controls the randomness of the generated responses. Higher values produce more unexpected and creative outputs, while lower values produce more focused and predictable answers. After choosing your model and settings, type your question or prompt into the input box and press Enter; the chatbot will generate its response in real time.
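
To see the effect of temperature outside the web UI, you can send the same prompt to the Ollama server directly. The sketch below assumes the server is reachable at its default address, `http://localhost:11434`, and uses the `/api/generate` endpoint with a `temperature` option. The helper names `build_payload` and `ask` are hypothetical, and no JSON escaping is done, so the prompt must not contain double quotes or backslashes.

```bash
# build_payload is a hypothetical helper: it prints a JSON request body
# for Ollama's /api/generate endpoint.
# Assumption: model and prompt contain no double quotes or backslashes,
# because this sketch does not escape them.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false,"options":{"temperature":%s}}\n' \
    "$1" "$2" "$3"
}

# ask sends the request to a local Ollama server and prints the raw JSON reply.
# Assumption: the server listens on http://localhost:11434.
ask() {
  curl --silent http://localhost:11434/api/generate -d "$(build_payload "$1" "$2" "$3")"
}
```

For example, running `ask llama2 "Describe Linux in one sentence." 0.2` a few times and then repeating it with `1.2` should make the difference in variability visible. The generated text is in the `response` field of the returned JSON.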

Frequently Asked Questions

What is the process for installing Ollama Chatbot on Linux?

To install Ollama, the backend that the Chatbot relies on, open a terminal and run the installation script with the command: `curl https://ollama.ai/install.sh | sh`. Follow the on-screen instructions to complete the setup.

How do I run Ollama in the background on my Linux system?

You can run Ollama in the background by installing Git and cloning a simple Python tool called Ollama Mini Daemon from GitHub. Run `git clone https://github.com/soltros/ollama-mini-daemon.git`, change into the `ollama-mini-daemon/` directory, make the scripts executable with `chmod +x *.py`, and then run `./daemon.py` to start Ollama in the background.

Where can I download Ollama models on Linux?

You can download Ollama models using the command `ollama pull <model_name>` in your Linux terminal. For example, to download the Llama2 model, use `ollama pull llama2`.

What are the system requirements for running Ollama Chatbot on Linux?

Ollama Chatbot runs best on a modern Nvidia GPU, but one is not required: without it, you can run the Chatbot on a multi-core CPU.

How do I install Node.js to set up Chatbot Ollama on Linux?

To install Node.js on Linux for Chatbot Ollama, run `curl -sL https://deb.nodesource.com/setup | sudo bash -`, then install it using `sudo apt-get install -y nodejs` for Ubuntu or respective commands for other distributions.

How can I access the Ollama Chatbot UI on my Linux machine?

After starting the Ollama Chatbot with the command `npm run dev`, you can access the UI by opening `http://localhost:3000` in your web browser.

What steps do I need to take to install Chatbot Ollama on Linux?

To install Chatbot Ollama, first install Node.js, then use `git clone https://github.com/ivanfioravanti/chatbot-ollama.git` to download the tool. Inside the cloned folder, run `npm ci` to install dependencies, and finally start the UI with `npm run dev`.

Can I run Ollama Chatbot without a GPU on Linux?

Yes, you can run Ollama Chatbot on Linux without a GPU by using a multi-core Intel or AMD CPU in CPU mode, although performance may be slower than with a GPU.

What command do I use to shut down the Ollama server running on Linux?

To shut down the Ollama server, execute the command `./shutdown_daemon.py` from the directory where the Ollama Mini Daemon script is located.

How can I change the model settings in Ollama Chatbot on Linux?

In the Ollama Chatbot UI, you can select your desired model and adjust the temperature settings using the interface provided before pressing Enter to submit your prompts.

| Step | Command/Instructions | Description |
|---|---|---|
| 1 | `curl https://ollama.ai/install.sh \| sh` | Installs the Ollama tool, a package manager for LLMs. |
| 2 | `sudo apt install git` (Ubuntu) | Installs Git, required to clone repositories. |
| 3 | `git clone https://github.com/soltros/ollama-mini-daemon.git` | Downloads the Ollama daemon script. |
| 4 | `chmod +x *.py` | Makes the downloaded Python scripts executable. |
| 5 | `./daemon.py` | Runs Ollama in the background. |
| 6 | `ollama pull llama2` | Downloads the Llama2 model. |
| 7 | `ollama pull orca2` | Downloads the Orca2 model. |
| 8 | Node.js installation commands for your Linux distribution | Required for running the Chatbot UI. |
| 9 | `git clone https://github.com/ivanfioravanti/chatbot-ollama.git` | Downloads the Chatbot Ollama UI. |
| 10 | `npm ci` | Installs the required JavaScript dependencies. |
| 11 | `npm run dev` | Starts the Chatbot UI. |

Summary

Ollama Chatbot on Linux gives you a ChatGPT-like interface for interacting with large language models running on your own machine. By following the steps above, you can install Ollama, download models, and launch the Chatbot UI. A modern Nvidia GPU gives the best performance, but a multi-core CPU also works.